I’ve always been worried about the way OpenGL renders the Saturn’s distorted polygons. As the Saturn doesn’t specify any Z coordinate (aka depth coordinate) when displaying a polygon, OpenGL has to approximate its value to apply a texture to it.

When the polygon is a regular quadrangle (i.e. a square, a rectangle, etc.), the texture coordinates and the polygon coordinates are identical, so OpenGL’s texture mapping is correct. In the case of a distorted quadrangle, only half of the texture coordinates are identical to the polygon coordinates, and the texture looks as if it were mapped onto the polygon as 2 separate triangles. (OpenGL always splits quadrangles into 2 triangles, as modern graphics cards only work with triangles.)

Maybe a graphical example will make the idea easier to grasp:

- original texture / texture coordinates (will be used to map the texture to the polygon)

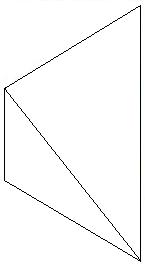

- texture coordinates + regular quadrangle coordinates (identical to the texture coordinates) = correct texture mapping on the quad

- texture coordinates + distorted quadrangle coordinates (different from the texture coordinates) = incorrect texture mapping on the quad

So I did some research. I wasn’t really sure that this problem could be solved without using a software renderer, but I was wrong. Using the projective texture space makes it possible to change the way OpenGL maps the texture coordinates to the quad coordinates, so distorted quads are rendered neatly (I won’t go into the details :p )
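For reference, here is a minimal sketch of one common way to get this effect. It is not necessarily what the test renderer does. The idea is to give each corner of the quad a fourth texture coordinate `q`, derived from where the two diagonals cross, and to pass `(s*q, t*q, q)` instead of `(s, t)`. The names `quad_q_weights` and `projective_texcoords` are made up for this illustration, and the quad is assumed to be convex with its corners given in winding order.

```python
def quad_q_weights(quad):
    """Return one q weight per corner of a convex quad.

    quad is a list of four (x, y) tuples in winding order.
    Hypothetical helper, written for illustration only.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad

    # Diagonal vectors: corner 0 -> corner 2 and corner 1 -> corner 3.
    ax, ay = x2 - x0, y2 - y0
    bx, by = x3 - x1, y3 - y1

    cross = ax * by - ay * bx
    if abs(cross) < 1e-9:
        raise ValueError("diagonals are parallel")

    # Solve p0 + t*a == p1 + u*b for the crossing point of the diagonals.
    cx, cy = x1 - x0, y1 - y0
    t = (cx * by - cy * bx) / cross
    u = (cx * ay - cy * ax) / cross
    if not (0.0 < t < 1.0 and 0.0 < u < 1.0):
        raise ValueError("diagonals do not cross inside the quad")

    # q_i = (d_i + d_opposite) / d_opposite, where d is the distance from a
    # corner to the crossing point. Along one diagonal this reduces to
    # 1 / (1 - t) for its first corner and 1 / t for its second corner.
    return [1.0 / (1.0 - t), 1.0 / (1.0 - u), 1.0 / t, 1.0 / u]


def projective_texcoords(quad, uvs):
    """Turn (s, t) texture coordinates into (s*q, t*q, q) triples."""
    return [(s * q, v * q, q) for (s, v), q in zip(uvs, quad_q_weights(quad))]


if __name__ == "__main__":
    # A trapezoid whose bottom edge is twice as long as its top edge.
    trapezoid = [(0, 0), (4, 0), (3, 2), (1, 2)]
    uvs = [(0, 0), (1, 0), (1, 1), (0, 1)]
    for coords in projective_texcoords(trapezoid, uvs):
        # With legacy OpenGL these would go to glTexCoord4f(s*q, t*q, 0, q).
        print(coords)
```

For a rectangle every corner gets the same `q`, so the result matches the ordinary mapping. For the trapezoid above, the two bottom corners get a `q` of about 3 and the two top corners about 1.5, which is the same 2:1 ratio as the edge lengths.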

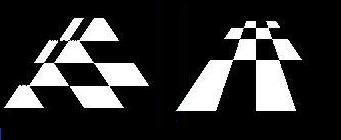

Here is a sample. I’m not using the same example as above, as I haven’t implemented this in the VDP1 renderer yet; the following screenshots were taken with a test renderer in the emu. The left one is rendered the way it has been done so far, and the right one uses the technique above. Both use a 4*4 black and white checkerboard as the texture.